Hello, World!

Welcome to our 36th edition of Hold the Code. In this edition, we cover Twitter’s crackdown on accounts associated with the Russian government and LinkedIn’s problem with fake profiles. Our weekly feature delves into the issues surrounding AI and trust.

As always, happy reading!

Twitter Bully

Twitter has restricted content from more than 300 accounts associated with the Russian government on its platform, including the account belonging to Vladimir Putin. Under the new rules, these accounts will no longer be promoted in users’ timelines or notifications.

The Bird’s Word

Twitter states that it will take action against any country that “restricts access to open internet while they’re engaged in armed conflict.”

"When a government that's engaged in armed conflict is blocking or limiting access to online services within their country, while they themselves continue to use those same services to advance their positions and viewpoints -- that creates a harmful information imbalance," says Yoel Roth, Head of Site Integrity at Twitter.

Moderating Misinformation

Accounts associated with the Russian government have recently been criticized for spreading misinformation during the war in Ukraine. On February 28th (four days after the invasion of Ukraine), Twitter moved to restrict accounts associated with Russian state-affiliated media groups, which led to a 30% reduction in the reach of this content. However, the platform didn’t touch Russian government accounts. According to Tim Graham, an analyst at the QUT Digital Media Research Centre, this created a “loophole” in Twitter’s moderation policies that allowed misinformation to keep spreading from government sources.

Not a Tweet-for-Tat

Twitter is not banned in Russia, but the platform has been severely limited in the country since the war in Ukraine began. Twitter maintains that this move is not retaliatory, and that the new rules will apply to any country that restricts online activity while engaged in interstate warfare.

Catching LinkedIn Imposters

Have you ever scrolled through LinkedIn and seen a profile picture that looked just a little too perfect? Maybe a bit eerie, as if something about it were off, though you couldn’t quite say what. If so, you’re not alone, and you have good reason to be suspicious. Renée DiResta and her colleague Josh Goldstein, researchers at the Stanford Internet Observatory, have discovered more than a thousand LinkedIn profiles using what appear to be artificially generated images. DiResta was first tipped off when she looked more closely at the sender of a message she had received and noticed something odd. What appeared to be a standard corporate headshot had several anomalies: hair disappearing and then reappearing, an earring on only one side, eyes aligned perfectly in the middle of the image, and an entirely blurred, nondescript background. As DiResta, a trained disinformation researcher, put it: “The face jumped out at me as being fake.” Further investigation revealed that the companies listed in these profiles’ work histories had no record of the supposed employees ever working there.

Why Are They Here?

Fake accounts created to spread misinformation have unfortunately become common over the past half decade, but the purpose of these accounts appears to be milder: they serve as automated sales agents that pose as real people when messaging other LinkedIn users. If a potential customer responded, an actual sales employee would step in to continue the conversation. In many cases, the companies the fake accounts were promoting had no idea these tactics were being used; they said they had hired outside firms to advertise their products and knew nothing beyond that. In response to the investigation, LinkedIn removed all profiles it concluded were fake, noting that its policy requires every account to represent a real person.

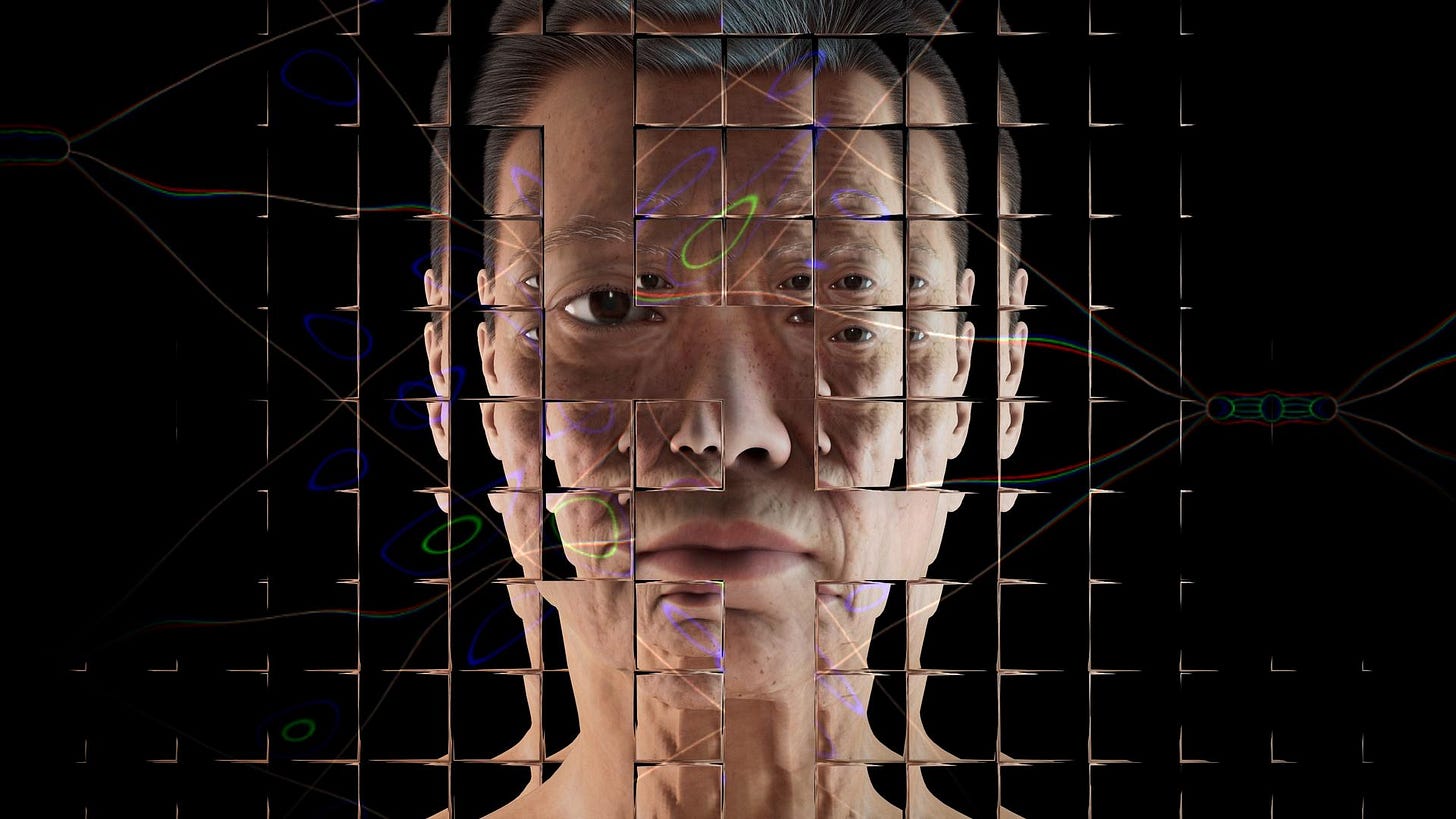

Weekly Feature: Trust Me, I’m an Algorithm

Trust is a complicated concept. It can take a long time to earn and very little time to lose, not only in human-to-human relationships but also when people interact with new technologies. Just one bad experience can break down a relationship that took months or even years to build. Artificial intelligence is especially vulnerable to this, according to leading venture capitalist Spiros Margaris, who works in the fintech (financial technology) space.

Numbers don’t lie

Public trust in artificial intelligence varies depending on a few factors:

Whether or not they are a business decision maker or owner (62% and 61% respectively)

How wealthy they are (57% for the affluent)

Their education level (56% for those with a higher-education degree versus 45% for those without)

Their age (44% for those 50 and older)

So, how do we improve people’s trust in AI? It might be a bit tricky at first, but Margaris, along with Don Fancher, a principal at Deloitte, has suggested a few ideas.

Old dog, more trust

The more one is exposed to something, whether it’s a person, a concept, or even a robot, the more one tends to trust it. That’s why we see so many celebrity advertisements: we implicitly trust a supermodel we’ve seen countless times over a rando off the street, no matter how well the stranger sells the product.

So, one way to improve trust in a technology is to simply expose more people to it.

Another way to improve trust in a technology is for the company proposing it to already be trusted. It may sound simple, but if a company has a strong reputation for trustworthiness and reliability, the public will more readily accept the technologies it produces.

All in all, the most important thing when it comes to building trust in artificial intelligence is to build technology that works well, and to take the time needed to develop it properly. After all, it is best never to have to rebuild trust at all: something that works the first time beats something you have to fix, and AI is no different.

Love HTC?❤️

Follow RAISO (our parent org) on social media for more updates, discussions, and events!

Instagram: @Raisogram

Twitter: @raisotweets

RAISO Website:

https://www.raiso.org

Written by Molly Pribble, Arielle Michelman, and Hope McKnight

Edited by Dwayne Morgan