Hold the Code #17

🇺🇸 🇺🇸 🇺🇸

Hello and Happy Memorial Day!

In this week's edition, we cover how AI is being used to address disinformation, its role in the recent Gaza Strip fighting, how non-tech companies are using AI, and, finally, how AI might lead to a brand new kind of search engine.

We hope you enjoy this week's stories, as well as a restful conclusion to your long weekend.

Truth Hurts...but Disinformation is Worse

The implications of disinformation spread on social media have been a hot topic, especially during the COVID pandemic, but the spread of false information via social media campaigns can also have dangerous effects on election integrity, the reach of conspiracy theories, and democracy itself.

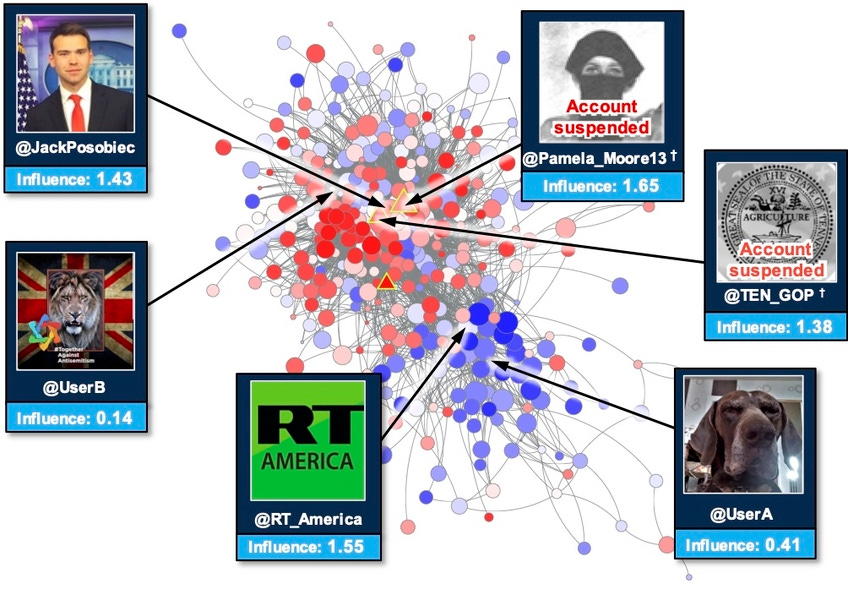

Building RIO

Members of MIT Lincoln Laboratory’s Artificial Intelligence Software Architectures and Algorithms Group (try saying that 3 times, fast) have been attempting to understand these campaigns through their Reconnaissance of Influence Operations (RIO) program, with the goal of developing a system to automatically detect disinformation within social media networks. The project started in 2014 and RIO was launched in 2017 to monitor social media leading up to the French elections that year.

RIO stands out from other similar systems by...

Using a number of novel statistical analysis and machine learning algorithms

Determining not only when an account is sharing disinformation, but also how much that account causes its broader social network to amplify this disinformation

Detecting disinformation from bot-operated and human-operated accounts (compared to other systems that only work on bot accounts)

Defending democracy

The RIO team intends for their system to be relevant even outside of the social media sphere, with applications in more traditional media (like newspapers or television news) as well as in government.

“Defending against disinformation is not only a matter of national security,” says Edward Kao, a PhD researcher in the Laboratory’s Lincoln Scholars program, “but also about protecting democracy.”

Learning more

To learn more, you can read the full article here. Additionally, The Great Hack is a documentary on Netflix that expands on the effects of social media campaigns on elections.

The First AI War?

During the recent conflict with Hamas in the Gaza Strip, the Israeli government relied heavily on artificial intelligence and supercomputing. Some, including a senior IDF Intelligence officer, have called it a "first-of-its-kind campaign...the first time artificial intelligence was a key component and power multiplier."

How was AI used?

The IDF (Israel Defense Forces) established an advanced AI platform that centralized all data on terrorist groups in the Gaza Strip in one system

Soldiers in Unit 8200 — the Intelligence Corps elite unit — pioneered algorithms that led to new programs called "Alchemist," "Gospel," and "Depth of Wisdom," which were used during the fighting in several ways

For example, "Gospel" used AI to generate recommendations for troops in the research division, producing targets for the Israeli Air Force to strike

What was the impact?

The military believes that using AI helped shorten the length of the fighting, having helped the IDF quickly and effectively gather targets using super-cognition.

While the satellites of the IDF's Unit 9900 have gathered GEOINT (geospatial intelligence) over the years, in this recent fighting they were able to automatically detect changes in terrain in real time. This meant that during the operation, the military was able to detect launching positions and strike them after rockets were fired.

Israel's use of AI also enabled it to map most of Hamas's underground network through a large-scale intelligence-gathering process. Many military scholars concur that the IDF's ability to crack Hamas’s network and completely map it removes one of the central dimensions of Hamas’s combat strategy.

Is warfare AI ethical?

Here are some reasons why we think it could be:

Streamlining missions and reducing conflict times, as demonstrated recently in the Gaza Strip

Minimizing civilian casualties by leading to better, more precise strikes

And here are some opposing perspectives:

Giving unfair combat advantages to more powerful countries. (In this recent conflict, at least 243 Palestinians were killed during the fighting, including 66 children and teens, with 1,910 people wounded.)

Applying technology in a way that could easily be seen as perverted, without necessarily making the world a better, more secure place

Either way, AI warfare seems likely to become increasingly common.

What do you think of these arguments?

Non-Tech Tech

When thinking of using AI in business, our minds don’t often move past the world of tech and Fortune 500 companies. Non-tech companies have struggled to get their AI programs past initial phases: only 21% of retail, 17% of automotive, 6% of manufacturing, and 3% of energy companies have successfully scaled their AI usage. But John Deere and Levi Strauss are examples of how non-tech companies are beginning to utilize new technologies.

John Deere: The company already used AI in machines that rely on large datasets and GPS coordination. Now its AI also reduces chemical usage by recognizing how to target weeds rather than non-weeds, and minimizes grain loss during harvest by optimizing automated control systems. Jahmy Hindman, the Chief Technology Officer of John Deere, says that it's the customer’s needs that drive innovation.

Levi Strauss: How did Levi's manage excess inventory during the Covid-19 pandemic? By applying AI to price elasticity datasets. What began as a small-scale program grew to 17 countries in Europe and was used for 11/11 Singles’ Day in China. Louis DiCesari, the Global Head of Data, Analytics, and AI at Levi Strauss, said that they took the program to the next level by focusing on three things:

Actionable goals

An open mind to imperfect AI

Communication and feedback to products

There is still room for non-tech companies to enter the world of AI. Hopefully, this can not only expand the success of the businesses themselves but also drive AI innovation by providing new perspectives from the non-tech world.

Could There Be A New Type of Search Engine?

How we search online has remained largely unchanged over the past decades. Could this be changing in the near future?

How search engines find answers

1998, Stanford. A couple of graduate students proposed a new algorithm called PageRank, which calculates the relevance of a web page according to how it is linked to other pages on the web. The algorithm was used to rank the results for a user's search query, and it became the backbone of Google.
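For the curious, here is a minimal sketch of the idea behind PageRank, not Google's actual implementation. It assumes a tiny made-up web (the page names in `toy_web` are hypothetical), a damping factor of 0.85 (a commonly cited value, used here as an assumption), and a fixed number of iterations. Each page repeatedly passes a share of its score along its outgoing links, so pages that many others link to end up ranked higher.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate PageRank scores by repeated redistribution of rank.

    links maps each page to the list of pages it links to.
    damping and iterations are illustrative defaults, not tuned values.
    """
    # Collect every page that appears as a source or as a link target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)

    # Start with rank spread evenly across all pages.
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page gets a small baseline share regardless of links.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: "home" is linked to by every other page.
toy_web = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}

ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

In this toy example, "home" comes out on top because every other page links to it, while "orphan" lands at the bottom because nothing links to it.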

Over the past decades, Google and other companies have continuously worked on improving the search experience, including developing AI solutions such as BERT to better process and respond to the user's search question. However, these innovations fall back on the same foundation – a ranking system that answers the user's questions with a list of (what it considers) the best answers.

"It’s as if you asked your doctor for advice and received a list of articles to read instead of a straight answer."

The ranking system does a good job – but what if there's a way to do better?

AI language models cast light on a new direction

What if, instead of giving the user a list of "most relevant" documents to read, the search engine can provide an answer synthesized from multiple relevant web pages, all in natural human language?

AI language models shine a light on a new direction search engines could take. Researchers at Google and beyond are now imagining a new approach – instead of providing a list of web pages, the search engine behaves like a human expert, providing a synthesized, natural-sounding answer. Although development of this new approach is still in the early stages and raises multiple challenges, many are excited about its potential to completely transform the online search experience.

Read the full article here.

Written by Larina Chen, Molly Pribble, and Lex Verb