Have any thoughts or questions about generative AI? We’d love to hear them! Please send anything you’d like to share through our Google Form.

…and

Our Special Announcement!

Should human writers and artists be paid when their work is used to train AI?

Sudowrite is an AI-based tool similar to ChatGPT. On its website, it claims that you can:

“Bust writer’s block with our magical writing AI.”

One of the many uses of ChatGPT is creative writing, and tools such as Sudowrite, built specifically for novel and screenplay writing, have since emerged as well. Have an idea for a story but can’t be bothered to actually write it? Give it to an AI to write. Did the book you just read end on a cliffhanger? Give it to an AI and have it predict what comes next.

Tools such as ChatGPT and Sudowrite raise not only essential questions about the definition of creativity but also important legal questions about the material used to “train” them. The large language models that form the foundation of these tools are trained on vast repositories of digital text from sources such as Wikipedia and the web archive Common Crawl. Should companies pay writers if they use their work to train their algorithms?

Visual art

In the visual art space, legal action over the alleged misuse of artists’ work is already under way in the UK courts. Stable Diffusion is an image-generating tool created by Stability AI that is similar to DALL-E and is trained on millions of images. Many of these images belong to Getty Images, the stock photo site with over 135 million copyrighted images, which has accused Stability AI of illegally using its copyrighted content.

I believe that writing, art or music created by an algorithm should be subject to the same copyright and intellectual property laws as works created by humans. Others, however, argue that training AI on human-made works is no different from humans drawing inspiration from the work of others. After all, do we tell writers to stop reading other people’s work in case they copy it?

Ultimately, we have to protect writers and artists from having their work misused, and that will require new legal frameworks built around generative AI.

What can’t ChatGPT do?

It’s easy to get overexcited about conversational AI technology. But ChatGPT, like all technology, still has its limits.

Difficult to confirm information

Verifying the accuracy of ChatGPT’s answers takes effort. Unlike Google, which returns links to its sources when a user searches for something, ChatGPT responds only with generated text and does not show where its information came from. This makes citing your sources difficult.

No recent data

ChatGPT’s training data only extends to 2021, so it has no knowledge of anything that happened after that year. Depending on your query, this could be a big issue.

Wrong answers

It’s important to remember that ChatGPT is brand-new technology, and its ability to generate answers should not be mistaken for an ability to generate correct answers. Stack Overflow, a popular question-and-answer website for computer programmers, banned answers generated by ChatGPT this past month. The site explained that because the average rate of getting correct answers from ChatGPT is “too low,” the posting of answers created by ChatGPT is “substantially harmful to the site.” A further danger is that wrong answers are hard to spot, because most responses look plausible. This makes it easy for users to crank out answers from ChatGPT without verifying their accuracy.

For a program designed to help users find answers fast, those answers seem to come with lots of strings attached.

CheatGPT?

Tempted to use ChatGPT for inspiration? Don’t worry, we have all been there. Cheating has become easier than ever with language-processing AI that can generate a response to almost any question. However, relying too heavily on ChatGPT can have some unexpected repercussions.

Casual forgery

Consider the following hypothetical situation: a student has a research paper due soon and decides to use ChatGPT to speed up the process.

The student types the following prompt into ChatGPT:

“I am doing a research project on ways people sleep on their couch. Could you provide an in-depth analysis of this and cite the most useful research?”

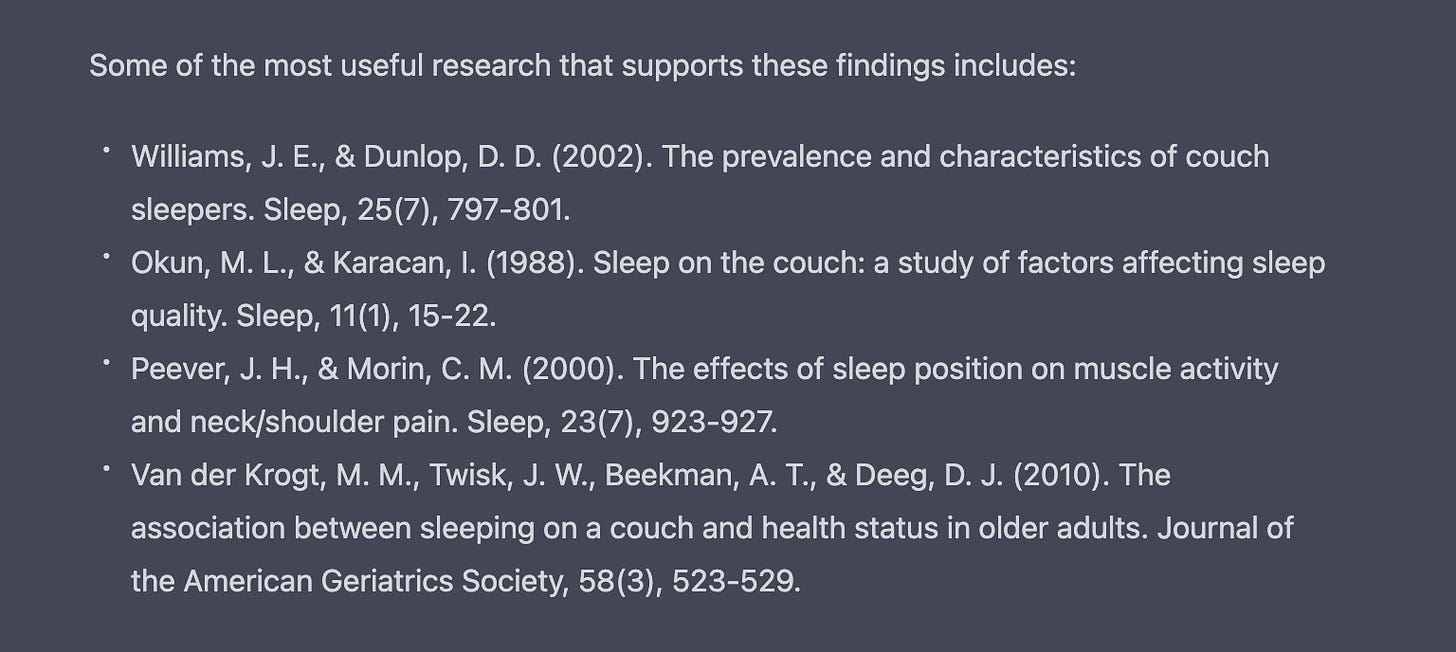

…and receives the following output for citations.

The made-up citations are properly formatted, and the journals they name are all real. Even more convincingly, the listed authors are real researchers who have published work on sleep. At first glance, would you even suspect they could be fake?

A simple Google search reveals that these sources do not actually exist. Unfortunately, the student did not fact-check the response, submitted these citations with the paper, and was accused of falsifying research.

Prevention

Although the prompt above is deliberately ridiculous, ChatGPT manages to produce a logical-sounding response, broadening the scope to psychological research on the relationship between couch sleeping and health. In my experience, ChatGPT cites real research for most prompts, but what risks do we take by relying on it blindly?

Currently, there is no proven way to stop ChatGPT from returning false citations. Detecting ChatGPT-generated writing has also become a difficult task for educational institutions across the country. A few tools claim to be able to identify ChatGPT’s responses, but could they really stop this surge in cheating?

Love HTC?❤️

Follow RAISO (our parent org) on social media for more updates, discussions, and events!

Instagram: @Raisogram

Twitter: @raisotweets

RAISO Website:

https://www.raiso.org